6.3. Land Cover Classification using Convolutional Neural Networks (CNNs)

This notebook is converted from the source: https://www.coursera.org/learn/getting-started-with-tensor-flow2

6.3.1. Exercise Instructions

By the end of this notebook, you’ll be able to 😃😃😃

Construct CNNs that classify EuroSAT images into one of 10 classes;

Save and load trained models;

Explore ways to improve the model performance.

Land Cover Classification aims to automatically provide labels describing the represented physical land type or how a land area is used (e.g., residential, industrial).

Convolutional Neural Networks (CNNs), the state-of-the-art image classification method in computer vision and machine learning, have been reported to be suitable for the classification of remotely sensed images.

However, the classification of remotely sensed images is a challenging task, particularly due to the lack of reliably labeled ground truth datasets.

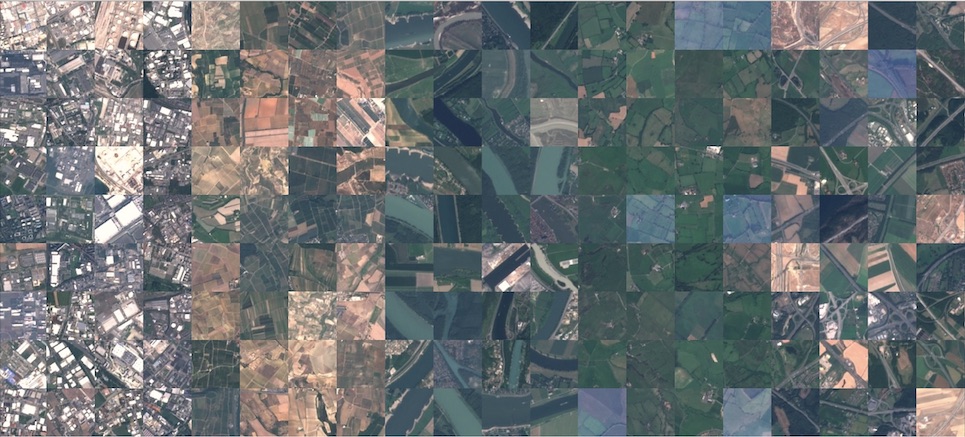

The EuroSAT dataset provides a large quantity of training data for this purpose. It consists of 27,000 labelled Sentinel-2 satellite images covering ten land use and land cover classes: residential, industrial, highway, river, forest, pasture, herbaceous vegetation, annual crop, permanent crop and sea/lake.

For reference, see the following papers:

Eurosat: A novel dataset and deep learning benchmark for land use and land cover classification. Patrick Helber, Benjamin Bischke, Andreas Dengel, Damian Borth. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2019.

Introducing EuroSAT: A Novel Dataset and Deep Learning Benchmark for Land Use and Land Cover Classification. Patrick Helber, Benjamin Bischke, Andreas Dengel. 2018 IEEE International Geoscience and Remote Sensing Symposium, 2018.

⚡⚡⚡ You can write your own code by following each question, or complete the provided code by filling in the blanks.

# Run this cell first to import all required packages.

import tensorflow as tf

from tensorflow.keras.preprocessing.image import load_img, img_to_array

from tensorflow.keras.models import Sequential, load_model

from tensorflow.keras.layers import Dense, Flatten, Conv2D, MaxPooling2D, Dropout

from tensorflow.keras.callbacks import ModelCheckpoint, EarlyStopping

from tensorflow.keras.utils import plot_model

from sklearn.model_selection import train_test_split

import os

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

# If you would like to make further imports from tensorflow, add them here

6.3.2. Data Setup

Loading the full EuroSAT dataset (27,000 images and labels) might crash Colab because of its limited RAM, so we use a smaller subset of the original dataset: 4,000 training images and 1,000 testing images, with roughly equal numbers of images per class.
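
A rough back-of-the-envelope estimate helps to see where the memory concern comes from. The sketch below assumes that every image is a 64 x 64 RGB patch (the subset loaded here may differ) and compares 8-bit storage with the 64-bit floats that NumPy produces when an integer array is divided by 255.0. All variable names are illustrative only.

```python
# Rough RAM estimate for holding the full EuroSAT dataset in memory.
# Assumption: every image is 64 x 64 pixels with 3 channels (RGB).
n_images = 27000
height, width, channels = 64, 64, 3

values = n_images * height * width * channels

bytes_uint8 = values * 1      # 1 byte per value when stored as uint8
bytes_float64 = values * 8    # 8 bytes per value after dividing by 255.0

print(f"uint8:   {bytes_uint8 / 1e9:.2f} GB")
print(f"float64: {bytes_float64 / 1e9:.2f} GB")
```

Under these assumptions the normalised float array is eight times larger than the raw 8-bit data, before counting any copies made during training.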

import pooch

data_url = 'https://unils-my.sharepoint.com/:u:/g/personal/tom_beucler_unil_ch/EUB7KobuofVIs9kbBlPsh8wByNhXgThqpzijsFCViy9wHw?download=1'

hash = 'acb7d0a0f5c74d69ade3c27777f1c150cfe03781b51925642381983b137bb61c'

data = pooch.retrieve(data_url, known_hash=hash, processor=pooch.Unzip())

# Import the Eurosat data

def load_eurosat_data():

    data_dir = '/root/.cache/pooch/a03aa179d211d203a83480995708754e-EUB7KobuofVIs9kbBlPsh8wByNhXgThqpzijsFCViy9wHw.unzip/data_cnn'

    x_train = np.load(os.path.join(data_dir, 'x_train.npy'))

    y_train = np.load(os.path.join(data_dir, 'y_train.npy'))

    x_val = np.load(os.path.join(data_dir, 'x_test.npy'))

    y_val = np.load(os.path.join(data_dir, 'y_test.npy'))

    return (x_train, y_train), (x_val, y_val)

(X_train, y_train), (X_val_test, y_val_test) = load_eurosat_data()

X_val, X_test, y_val, y_test = train_test_split(X_val_test, y_val_test, test_size=0.5, random_state=42)

#Normalize data

X_train = X_train / 255.0

X_val = X_val / 255.0

X_test = X_test / 255.0
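
After normalising, it can be worth checking the value range and the class balance before training. The sketch below does this on a small synthetic array standing in for the real data; the shapes, the uint8 dtype and the names X_demo and y_demo are assumptions made for illustration, not properties read from the downloaded files.

```python
import numpy as np

# Synthetic stand-in for the real arrays (assumed shapes and dtype):
# 200 RGB images of 64 x 64 pixels with integer labels 0..9.
rng = np.random.default_rng(42)
X_demo = rng.integers(0, 256, size=(200, 64, 64, 3), dtype=np.uint8)
y_demo = np.arange(200) % 10  # perfectly balanced labels, for illustration

# Scale pixel values from [0, 255] to [0, 1], as in the cell above.
X_demo = X_demo / 255.0

# Check the value range, the dtype and the number of samples per class.
print(X_demo.min(), X_demo.max(), X_demo.dtype)
classes, counts = np.unique(y_demo, return_counts=True)
print(dict(zip(classes.tolist(), counts.tolist())))
```

The same two checks can be applied to X_train and y_train once they are loaded.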

6.3.3. Q1 Build a CNN model1 to classify EuroSAT data

Let’s construct a CNN called ‘model1’ using the Sequential API, according to the following specifications:

The model should use the input_shape in the function argument to set the input size in the first layer.

The first layer should be a Conv2D layer with 16 filters, a 3x3 kernel size, a ReLU activation function and ‘SAME’ padding. Name this layer ‘conv_1’.

The second layer should also be a Conv2D layer with 8 filters, a 3x3 kernel size, a ReLU activation function and ‘SAME’ padding. Name this layer ‘conv_2’.

The third layer should be a MaxPooling2D layer with a pooling window size of 8x8. Name this layer ‘pool_1’.

The fourth layer should be a Flatten layer, named ‘flatten’.

The fifth layer should be a Dense layer with 32 units and a ReLU activation. Name this layer ‘dense_1’.

The sixth and final layer should be a Dense layer with 10 units and softmax activation. Name this layer ‘dense_2’.

In total, the network should have 6 layers.
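
To check your model summary against the specification, you can trace the expected output shape of each layer by hand. The sketch below does this in plain Python, assuming 64 x 64 x 3 input images (check X_train[0].shape for the actual value), stride 1 in the convolutions, and the default pooling stride equal to the pooling window.

```python
# Trace the tensor shape through the six layers of model1.
# Assumption: the input images are 64 x 64 pixels with 3 channels.
h, w, c = 64, 64, 3

shapes = []

# conv_1 and conv_2: 'SAME' padding with stride 1 keeps height and width;
# the number of channels becomes the number of filters.
c = 16
shapes.append(("conv_1", (h, w, c)))
c = 8
shapes.append(("conv_2", (h, w, c)))

# pool_1: an 8x8 window (stride defaults to the window size) divides
# height and width by 8.
h, w = h // 8, w // 8
shapes.append(("pool_1", (h, w, c)))

# flatten: all remaining values become one vector.
flat = h * w * c
shapes.append(("flatten", (flat,)))

# dense_1 and dense_2: the output size equals the number of units.
shapes.append(("dense_1", (32,)))
shapes.append(("dense_2", (10,)))

for name, shape in shapes:
    print(f"{name:8s} -> {shape}")
```

In the Keras summary each shape is additionally prefixed with a batch dimension shown as None.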

# Initiate an empty Sequential model

model1 = ____()

# Assign value to input_shape variable

input_shape = ____.shape

# Add the first layer 'conv_1': a Conv2D layer with 16 filters, a 3x3 kernel size, a ReLU activation function and 'SAME' padding.

# Ensure the weights are initialised by providing the input_shape argument in the first layer.

model1.add(____(____,(____,____),padding='____', activation='____',input_shape=____,name='____'))

# Add the second layer 'conv_2': a Conv2D layer with 8 filters, a 3x3 kernel size, a ReLU activation function and 'SAME' padding.

model1.add(____(____,(____,____),padding='____', activation='____',input_shape=____,name='____'))

# Add the third layer 'pool_1': a MaxPooling2D layer with a pooling window size of 8x8.

model1.add(____((____,____),name='____'))

# Add the fourth layer 'flatten': a Flatten layer.

model1.add(____(name='____'))

# Add the fifth layer 'dense_1': a Dense layer with 32 units, a ReLU activation.

model1.add(____(____, activation='____',name='____'))

# Add the sixth layer 'dense_2': a Dense layer with 10 units and softmax activation.

model1.add(____(____,activation='____',name='____'))

# Print the model summary

model1.____()

# Or you can build the above model in one go

input_shape = ____.shape

model1 = ____([

____(____,(____,____),padding='____', activation='____',input_shape=____,name='____'),

____(____,(____,____),padding='____', activation='____',input_shape=____,name='____'),

____((____,____),name='____'),

____(name='____'),

____(____, activation='____',name='____'),

____(____,activation='____',name='____')

])

# Print the model summary

model1.____()

❓❓❓ Do you have a model of the following structure?

6.3.4. Q2 Compile model1 with your chosen optimiser, loss function, and an evaluation metric

Compile model1 with the Adam optimiser, the sparse categorical cross-entropy loss function, and a single accuracy metric.
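
The "sparse" variant of categorical cross-entropy takes integer class labels (0 to 9 here) rather than one-hot vectors. The NumPy sketch below shows the underlying arithmetic on made-up probabilities; it is a simplified illustration, not the Keras implementation (which, for example, also clips probabilities for numerical stability).

```python
import numpy as np

def sparse_categorical_crossentropy(y_true, y_prob):
    """Mean of -log(p[true class]) over the batch."""
    y_true = np.asarray(y_true)
    y_prob = np.asarray(y_prob, dtype=float)
    # Pick, for every sample, the probability assigned to its true class.
    picked = y_prob[np.arange(len(y_true)), y_true]
    return float(np.mean(-np.log(picked)))

# Two samples, three classes (made-up probabilities for illustration).
y_true = [0, 2]
y_prob = [[0.7, 0.2, 0.1],
          [0.1, 0.1, 0.8]]
loss_demo = sparse_categorical_crossentropy(y_true, y_prob)
print(f"demo loss: {loss_demo:.4f}")

# A model that assigns equal probability to 10 classes has loss ln(10).
uniform = np.full((4, 10), 0.1)
loss_uniform = sparse_categorical_crossentropy([0, 3, 5, 9], uniform)
print(f"uniform loss: {loss_uniform:.4f}")
```

The second value, ln(10) or about 2.30, is a useful reference point for the loss of an untrained 10-class model.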

# Compile the model with the Adam optimiser, sparse categorical cross entropy loss function, and a single accuracy metric.

model1.____(optimizer='____',

loss = '____',

metrics = ['____'])

6.3.5. Q3 Evaluate the initial accuracy of model1: Is the initial accuracy of the model as you expected?

# Calculate its initialised test accuracy

test_loss, test_acc = model1.____(x=____, y=____, verbose=1)

print('Test accuracy: {acc:0.3f}'.format(acc=test_acc))

❓❓❓ Does your model have a similar initial accuracy & why?
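
As a reference point for this question, the sketch below simulates uniformly random guessing on a balanced 10-class problem. The labels and guesses are synthetic; an untrained network is not literally a random guesser, but on a roughly balanced test set its accuracy is often in the same range.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples, n_classes = 10000, 10

# Balanced integer labels and uniformly random guesses (synthetic data).
y_true = np.arange(n_samples) % n_classes
y_guess = rng.integers(0, n_classes, size=n_samples)

chance_accuracy = float(np.mean(y_true == y_guess))
print(f"Accuracy of random guessing: {chance_accuracy:.3f}")
```

With 10 equally likely classes the expected value is 1/10, so an initial test accuracy near 0.1 would be consistent with a model that has not learned anything yet.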

6.3.6. Q4 Train model1 for 15 epochs and store the result in the variable ‘history’

Store the fitting result in a variable called history.

# Fit the model with 15 epochs and store the fitting result in a variable history.

epochs = ____

history = model1.____(____, ____,

epochs=____,

verbose=1,

validation_data=(____, ____))

❓❓❓ Do you have similar print output?

6.3.7. Q5 Evaluate the accuracy of the fitted model1, plot the training and validation loss and accuracy, and plot the model using Keras model plotting utilities

Evaluate the fitted model

What’s the test accuracy after training the model? Does it improve from the initialization?

Plot the model using Keras model plotting utilities
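
The object returned by fit stores its per-epoch metrics in history.history, a dictionary of lists with one entry per epoch. The sketch below uses a hand-written dictionary with the key names Keras typically produces for an 'accuracy' metric; the numbers are made up for illustration and are not results from this exercise.

```python
# Made-up numbers standing in for history.history (not real results).
demo_history = {
    "loss":         [2.10, 1.60, 1.30, 1.10, 1.00],
    "accuracy":     [0.20, 0.40, 0.52, 0.60, 0.64],
    "val_loss":     [1.90, 1.50, 1.35, 1.30, 1.40],
    "val_accuracy": [0.25, 0.43, 0.50, 0.55, 0.53],
}

# Epochs are reported starting from 1, list indices start from 0.
val_acc = demo_history["val_accuracy"]
best_index = val_acc.index(max(val_acc))
best_epoch = best_index + 1

print(f"Best validation accuracy {val_acc[best_index]:.2f} at epoch {best_epoch}")
```

In this made-up example the validation accuracy peaks before the last epoch while the training accuracy keeps rising, which is the pattern to look for in your own plot.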

# Calculate the test accuracy

score = model1.____(____, ____, verbose=0)

print('Test accuracy: {acc:0.3f}'.format(acc=score[1]))

❓❓❓ Do you have similar test accuracy?

# Plot training, validation set loss and accuracy

pd.DataFrame(____.____).plot(figsize=(8,5))

plt.show()

❓❓❓ Do you have a similar plot?

# Plot the model using Keras model plotting utilities

____(model1, show_shapes=True, show_layer_names=True)

❓❓❓ Do you have a similar plot?

6.3.8. Q6 Create two callbacks to save the model weights at every epoch and at the epoch with the best validation accuracy

1. checkpoint_every_epoch: checkpoint that saves the model weights every epoch during training;

2. checkpoint_best_only: checkpoint that saves only the weights with the highest validation accuracy.
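
The filepath given to a checkpoint callback can contain named placeholders such as {epoch}, which are filled in with Python string formatting when a file is written. The short sketch below shows what the three-digit template used in this exercise expands to; it only formats strings and does not create any files.

```python
# Filepath template as used in this exercise.
path_template = "checkpoints_every_epoch/checkpoint_{epoch:03d}"

# Fill in the epoch number for the first three epochs.
paths = [path_template.format(epoch=e) for e in range(1, 4)]
for p in paths:
    print(p)
```

The 03d format pads the epoch number with zeros to three digits, so the files sort in training order.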

# Create a ModelCheckpoint object that:

# - saves the weights only at the end of every epoch

# - saves into a directory called 'checkpoints_every_epoch' inside the current working directory

# - generates filenames in that directory like 'checkpoint_XXX' where

# XXX is the epoch number formatted to have three digits, e.g. 001, 002, 003, etc.

path_every = 'checkpoints_every_epoch/checkpoint_{epoch:03d}'

checkpoint_every_epoch = ____(filepath=____,

save_freq=____,

save_weights_only=____,

monitor = ____,

verbose=0)

# Create a ModelCheckpoint object that:

# - saves only the weights that generate the highest validation accuracy

# - saves into a directory called 'checkpoints_best_only' inside the current working directory

# - generates a file called 'checkpoints_best_only/checkpoint'

path_best = 'checkpoints_best_only/checkpoint'

checkpoint_best_only = ____(filepath=____,

save_freq=____,

save_weights_only=____,

save_best_only = ____,

monitor = ____,

verbose=1)

6.3.9. Q7 Build a CNN model with the same initial structure as model1 and train it for 15 epochs using the callbacks from Q6

Now you will train the model using the two callbacks you created. If you created the callbacks correctly, two things should happen:

At the end of every epoch, the model weights are saved into a directory called checkpoints_every_epoch;

At the end of every epoch, the model weights are saved into a directory called checkpoints_best_only, but only if those weights lead to the highest validation accuracy so far.

Optionally, if you also add an EarlyStopping callback (already imported above), training stops when the validation accuracy has not improved in three epochs.

You should then have two directories:

A directory called checkpoints_every_epoch containing filenames that include checkpoint_001, checkpoint_002, etc., with 001, 002 corresponding to the epoch;

A directory called checkpoints_best_only containing filenames that include checkpoint, which contain only the weights leading to the highest validation accuracy.
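
To build intuition for what ends up in each directory, the sketch below replays the saving logic of the two checkpoints in plain Python. The validation accuracies are made up, and the loop is a simplified stand-in for the callbacks rather than their actual implementation.

```python
# Made-up validation accuracies for six epochs (not real results).
val_accuracy = [0.31, 0.45, 0.44, 0.52, 0.50, 0.51]

saved_every_epoch = []   # files checkpoint_every_epoch would write
saved_best_only = []     # epochs at which checkpoint_best_only would overwrite its file
best_so_far = float("-inf")

for epoch, acc in enumerate(val_accuracy, start=1):
    # One new file per epoch, regardless of the metric.
    saved_every_epoch.append(f"checkpoint_{epoch:03d}")
    # The best-only checkpoint is rewritten only on a strict improvement.
    if acc > best_so_far:
        best_so_far = acc
        saved_best_only.append(epoch)

print(saved_every_epoch)
print(saved_best_only)
```

In this example the best-only file would hold the weights from epoch 4, while the latest per-epoch file holds the weights from epoch 6, so the two sets of weights can differ.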

# Create a model with the same initial structure as model1 again from scratch

input_shape = X_train[0].shape

model = Sequential([

Conv2D(16,(3,3),padding='SAME', activation='relu',input_shape=input_shape,name='conv_1'),

Conv2D(8,(3,3),padding='SAME', activation='relu',input_shape=input_shape,name='conv_2'),

MaxPooling2D((8,8),name='pool_1'),

Flatten(name='flatten'),

Dense(32, activation='relu',name='dense_1'),

Dense(10,activation='softmax',name='dense_2')

])

model.compile(optimizer='adam',

loss = 'sparse_categorical_crossentropy',

metrics = ['accuracy'])

# Train model using the callbacks you just created with 15 epochs

callbacks = [____, ____]

history = model.fit(____, ____, epochs=15, validation_data=(X_val, y_val), ____=____)

❓❓❓ Do you have similar print output?

6.3.10. Q8 Create new models model_last_epoch and model_best_epoch with the initial structure of model1; load the weights from the latest saved epoch and from the saved epoch with the highest validation accuracy, respectively

Now you will use the weights you just saved in fresh models. You should create two models, each freshly instantiated with the same structure as model1:

model_last_epoch should contain the weights from the latest saved epoch;

model_best_epoch should contain the weights from the saved epoch with the highest validation accuracy.

Hint: use the tf.train.latest_checkpoint function to get the filename of the latest saved checkpoint file. Check the TensorFlow documentation for details.

# Create a new CNN with same structure as model1 and compile

input_shape = X_train[0].shape

model_last_epoch = Sequential([

Conv2D(16,(3,3),padding='SAME', activation='relu',input_shape=input_shape,name='conv_1'),

Conv2D(8,(3,3),padding='SAME', activation='relu',input_shape=input_shape,name='conv_2'),

MaxPooling2D((8,8),name='pool_1'),

Flatten(name='flatten'),

Dense(32, activation='relu',name='dense_1'),

Dense(10,activation='softmax',name='dense_2')

])

model_last_epoch.compile(optimizer='adam',

loss = 'sparse_categorical_crossentropy',

metrics = ['accuracy'])

# Load on the weights from the last training epoch.

model_last_epoch.____(____)

# Create a new CNN with same structure as model1 and compile

input_shape = X_train[0].shape

model_best_epoch = Sequential([

Conv2D(16,(3,3),padding='SAME', activation='relu',input_shape=input_shape,name='conv_1'),

Conv2D(8,(3,3),padding='SAME', activation='relu',input_shape=input_shape,name='conv_2'),

MaxPooling2D((8,8),name='pool_1'),

Flatten(name='flatten'),

Dense(32, activation='relu',name='dense_1'),

Dense(10,activation='softmax',name='dense_2')

])

model_best_epoch.compile(optimizer='adam',

loss = 'sparse_categorical_crossentropy',

metrics = ['accuracy'])

# Load the weights leading to the highest validation accuracy.

model_best_epoch.____(____)

# Compare the validation accuracy of the last and the best model.

score = model_last_epoch.evaluate(____, ____, verbose=0)

print('Model validation accuracy with last epoch weights: {acc:0.3f}'.format(acc=score[1]))

print('')

score = model_best_epoch.evaluate(____, ____, verbose=0)

print('Model validation accuracy with best epoch weights: {acc:0.3f}'.format(acc=score[1]))

❓❓❓ Are the saved models’ validation accuracies as expected?

6.3.11. Q9 Explore improving the model performance by reducing the bias: design and train model2

For example, train a CNN called model2, which has the same structure as model1 but with more filters and units:

conv_1 has 32 filters, conv_2 has 64 filters, and dense_1 has 256 units.
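
One way to quantify how much capacity this change adds is to count the trainable parameters of both architectures. The sketch below does so with plain arithmetic, assuming 64 x 64 x 3 inputs, 3x3 kernels, 'SAME' padding and the 8x8 pooling layer; the helper functions are illustrative and not part of Keras.

```python
def conv2d_params(kernel, in_channels, filters):
    """Weights plus one bias per filter."""
    return (kernel * kernel * in_channels + 1) * filters

def dense_params(inputs, units):
    """Weights plus one bias per unit."""
    return (inputs + 1) * units

def count_params(filters_1, filters_2, dense_units,
                 size=64, channels=3, pool=8, n_classes=10):
    # Assumption: size x size input; 'SAME' padding keeps the spatial size,
    # the pooling layer divides it by `pool`.
    flat = (size // pool) ** 2 * filters_2
    return (conv2d_params(3, channels, filters_1)
            + conv2d_params(3, filters_1, filters_2)
            + dense_params(flat, dense_units)
            + dense_params(dense_units, n_classes))

params_model1 = count_params(16, 8, 32)
params_model2 = count_params(32, 64, 256)
print(f"model1: {params_model1:,} parameters")
print(f"model2: {params_model2:,} parameters")
```

Under these assumptions most of the additional parameters sit in dense_1, because its input is the flattened output of the pooling layer. You can compare the totals with the output of the model summary.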

❓❓❓

Does the model performance improve?

What are other potential strategies to improve model performance by reducing the bias?

# Build a Sequential model model2 with same specification as the previous model1,

# but change the number of filters in the first convolution layer to 32 and second convolution layer to 64, the dense_1 layer to 256 units.

# Ensure the weights are initialised by providing the input_shape argument in the first layer.

input_shape = X_train[0].shape

model2 = Sequential([

____(____,(3,3),padding='SAME', activation='relu',input_shape=input_shape,name='conv_1'),

____(____,(3,3),padding='SAME', activation='relu',input_shape=input_shape,name='conv_2'),

MaxPooling2D((8,8),name='pool_1'),

Flatten(name='flatten'),

____(____, activation='relu',name='dense_1'),

Dense(10,activation='softmax',name='dense_2')

])

model2.compile(optimizer='adam',

loss = 'sparse_categorical_crossentropy',

metrics = ['accuracy'])

# Train the model and store the results in a variable called history

history = model2.fit(____, ____, epochs=15, validation_data=(____, ____))

❓❓❓ Is your printed output similar to the following screenshot?

# Calculate the test accuracy

score = model2.____(____, ____, verbose=0)

print('Test accuracy: {acc:0.3f}'.format(acc=score[1]))

❓❓❓ Does your model’s test accuracy improve and why?

6.3.12. Q10 Explore improving the model performance by reducing the variance: design and train model3

For example, train a CNN called model3, which has the same structure as model2 but adds two dropout layers with a dropout rate of 0.2: one after the max pooling layer and one before the final dense layer.
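
To see what a dropout rate of 0.2 means in practice, the NumPy sketch below implements the commonly used "inverted dropout" scheme: during training a random 20% of the values are set to zero and the remaining ones are scaled up by 1 / (1 - rate), while at inference time the input passes through unchanged. The function is an illustrative stand-in, not the Keras layer itself.

```python
import numpy as np

def dropout(x, rate, rng, training=True):
    """Zero a fraction `rate` of the values and rescale the rest."""
    if not training or rate == 0.0:
        return x
    keep_mask = rng.random(x.shape) >= rate
    return x * keep_mask / (1.0 - rate)

rng = np.random.default_rng(0)
activations = np.ones((1000, 256))

dropped = dropout(activations, rate=0.2, rng=rng)
print(f"fraction set to zero: {np.mean(dropped == 0):.3f}")
print(f"mean after dropout:   {dropped.mean():.3f}")

# At inference time the layer passes its input through unchanged.
unchanged = dropout(activations, rate=0.2, rng=rng, training=False)
```

The rescaling keeps the average activation roughly the same in training and inference, so the following layer sees inputs on a similar scale in both modes.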

❓❓❓

Does the model performance improve?

What are other potential strategies to improve model performance by reducing the variance?

# Build a Sequential model model3 with same specification as the previous model2,

# but add two dropout layers - one after the max pooling layer, one before the final dense layer.

# Ensure the weights are initialised by providing the input_shape argument in the first layer.

input_shape = X_train[0].shape

model3 = Sequential([

Conv2D(32,(3,3),padding='SAME', activation='relu',input_shape=input_shape,name='conv_1'),

Conv2D(64,(3,3),padding='SAME', activation='relu',input_shape=input_shape,name='conv_2'),

MaxPooling2D((8,8),name='pool_1'),

____(____),

Flatten(name='flatten'),

Dense(256, activation='relu',name='dense_1'),

____(____),

Dense(10,activation='softmax',name='dense_2')

])

model3.compile(optimizer='adam',

loss = 'sparse_categorical_crossentropy',

metrics = ['accuracy'])

# Train the model and store the results in a variable called history

history = model3.fit(____, ____, epochs=15, validation_data=(____, ____))

❓❓❓ Is your printed output similar to the following screenshot?

# Calculate the test accuracy

score = model3.____(____, ____, verbose=0)

print('Test accuracy: {acc:0.3f}'.format(acc=score[1]))

❓❓❓ Does your model’s test accuracy improve and why?

6.3.13. Q11 Explore improving the model performance using transfer learning from a pretrained model

Use the pretrained VGG16 model from Keras Applications.

Does the model performance improve after the above explorations?

How would you further improve the model performance?
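
Before training, it can help to estimate what the frozen convolutional base hands over to the new classification head. The sketch below assumes 64 x 64 x 3 inputs and relies on two properties of the VGG16 convolutional base: five 2x2 max-pooling stages and 512 channels in the last block. It then counts the parameters of the Dense(256) and Dense(10) head used in model4, which are the only ones updated while the base is frozen.

```python
# Assumptions: 64 x 64 x 3 input; VGG16's convolutional base has five
# 2x2 max-pooling stages and 512 channels in its last block.
size = 64
for _ in range(5):
    size //= 2          # each pooling stage halves height and width

feature_map = (size, size, 512)
flat = size * size * 512

# Trainable head used in model4: Dense(256) followed by Dense(10).
# Dropout layers have no parameters.
head_params = (flat + 1) * 256 + (256 + 1) * 10

print(f"conv_base output: {feature_map}")
print(f"flattened size:   {flat}")
print(f"trainable head parameters: {head_params:,}")
```

You can compare these numbers with the model4 summary, which reports trainable and non-trainable parameters separately.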

from tensorflow.keras.applications import ____

conv_base = ____.____(include_top=False, input_shape = input_shape)

# freeze the weights

for layer in conv_base.layers:

    layer.trainable = False

model4 = Sequential([

conv_base,

Flatten(name='flatten'),

Dropout(0.2),

Dense(256, activation='relu',name='dense_1'),

Dropout(0.2),

Dense(10,activation='softmax',name='dense_2')

])

model4.compile(optimizer='adam',

loss = 'sparse_categorical_crossentropy',

metrics = ['accuracy'])

history = model4.fit(X_train, y_train, epochs=15, validation_data=(X_val, y_val))

❓❓❓ Is your printed output similar to the following screenshot? Note that this model takes a long time to train.

# Calculate the test accuracy

score = model4.evaluate(X_test, y_test, verbose=0)

print('Test accuracy: {acc:0.3f}'.format(acc=score[1]))

✌✌✌ Congratulations! You have completed this exercise. Now you know how to train CNNs to classify remote sensing images.

Still, the accuracy reported in the EuroSAT dataset paper (Eurosat: A novel dataset and deep learning benchmark for land use and land cover classification) is 98.57%. ⚡⚡⚡ Have you found strategies to improve the performance to that level?